
Optimized OneTrainer Settings and Tips for Flux.1 LoRA and DoRA Training (20% Faster)

Preparing for Training

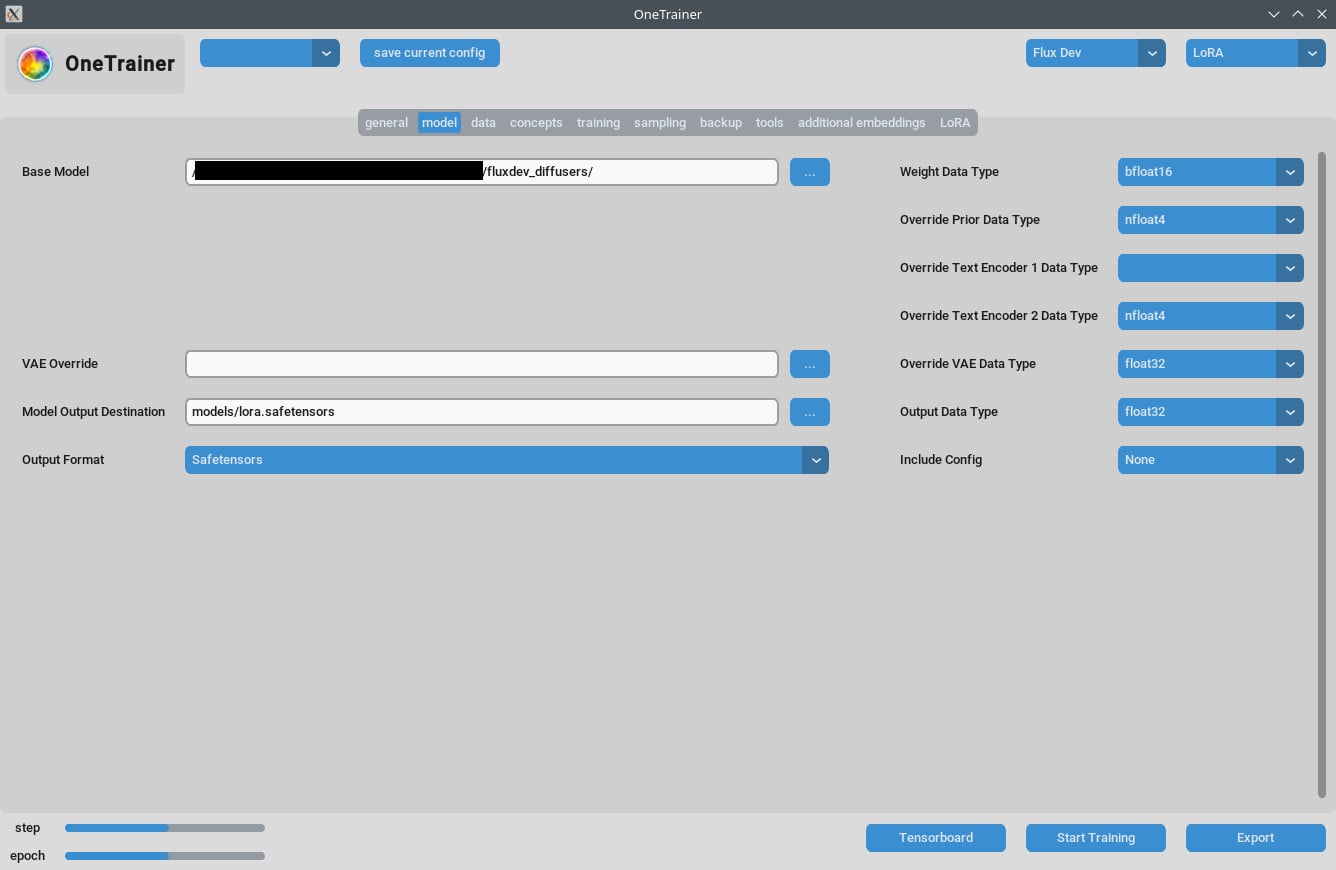

Selecting the Model

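First, make sure you've selected the correct Flux.1 model. Flux.1 is released in dev, pro, and schnell variants. Only the dev and schnell weights are publicly available, though. Pro is offered only through an API, so it cannot be loaded into a local trainer. Choose the variant that suits your project.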

- Acquire the Model: Download the model from an official source such as Hugging Face.

- Load the Model: In OneTrainer, open the "model" tab and point it to the downloaded model files.

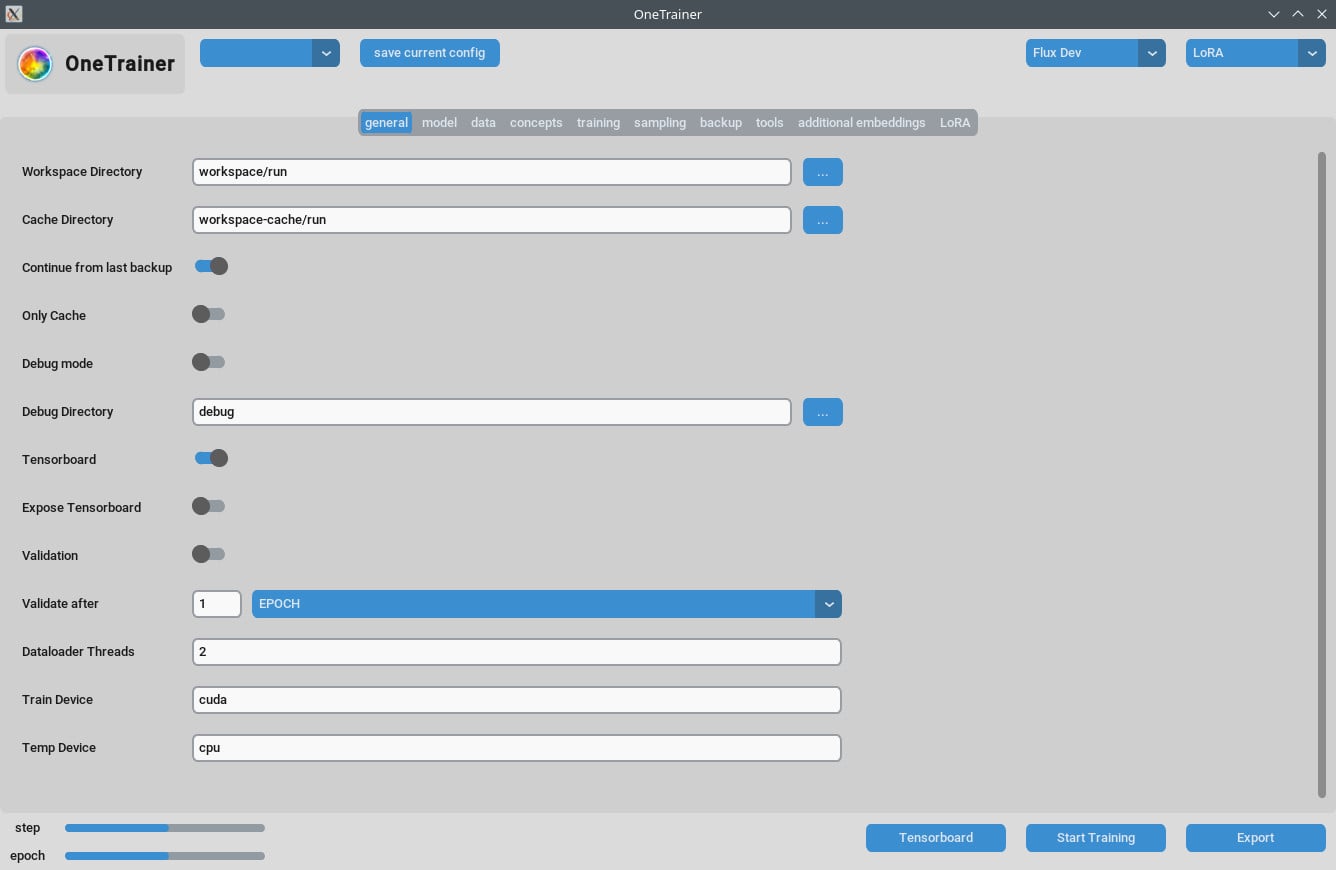

Setting Up the Environment

Hardware Requirements:

- GPU: An NVIDIA RTX 3060 or better is recommended. An RTX 4090 trains considerably faster.

- VRAM: At least 12 GB, especially for training at higher resolutions.

- RAM: At least 10 GB of system RAM is advisable; more is better.

Software Requirements:

- Operating System: Tested on both Windows and Linux.

- Dependencies: Ensure all dependencies are installed. Check OneTrainer's documentation for the list of required libraries and tools.

Detailed Settings and Configurations

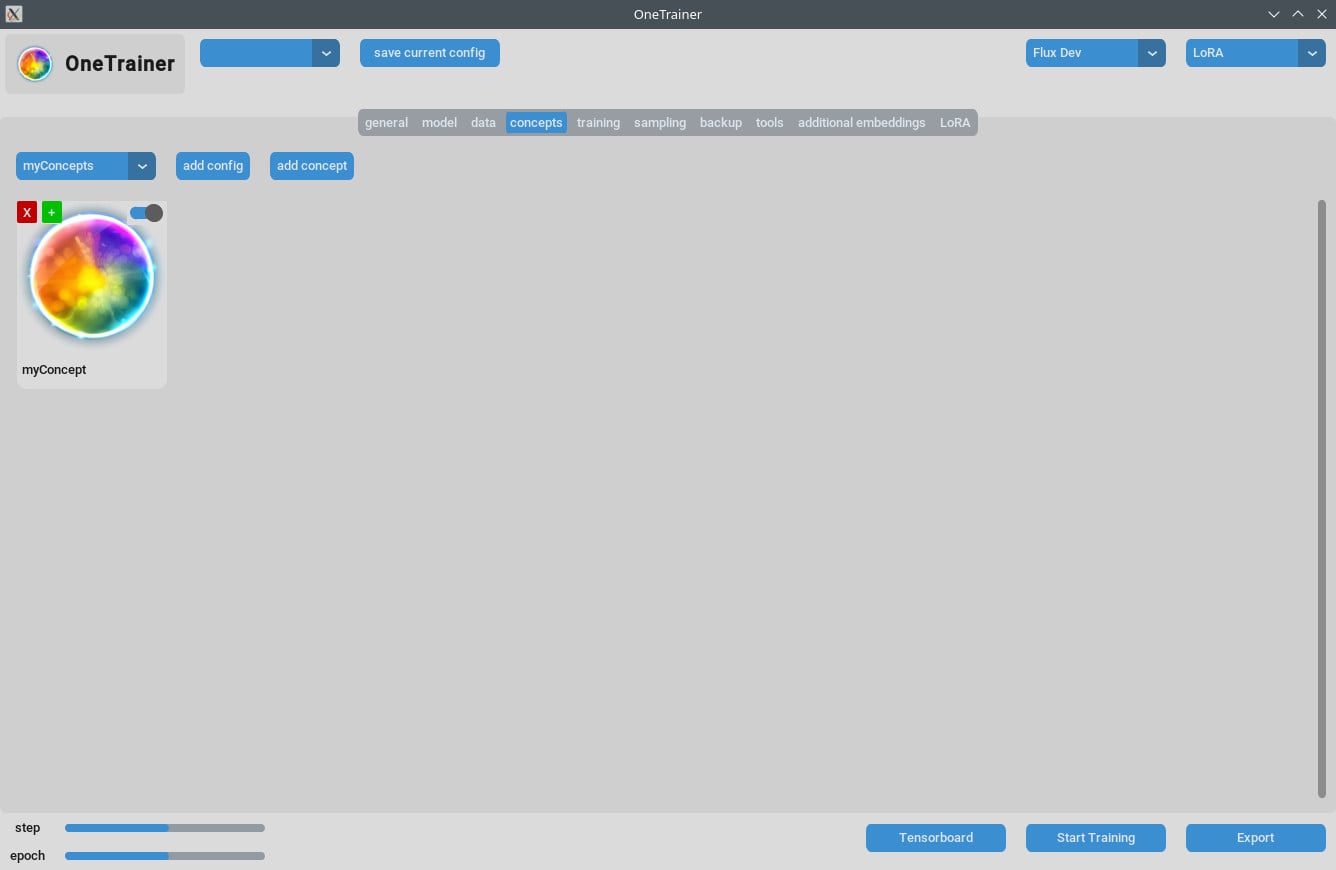

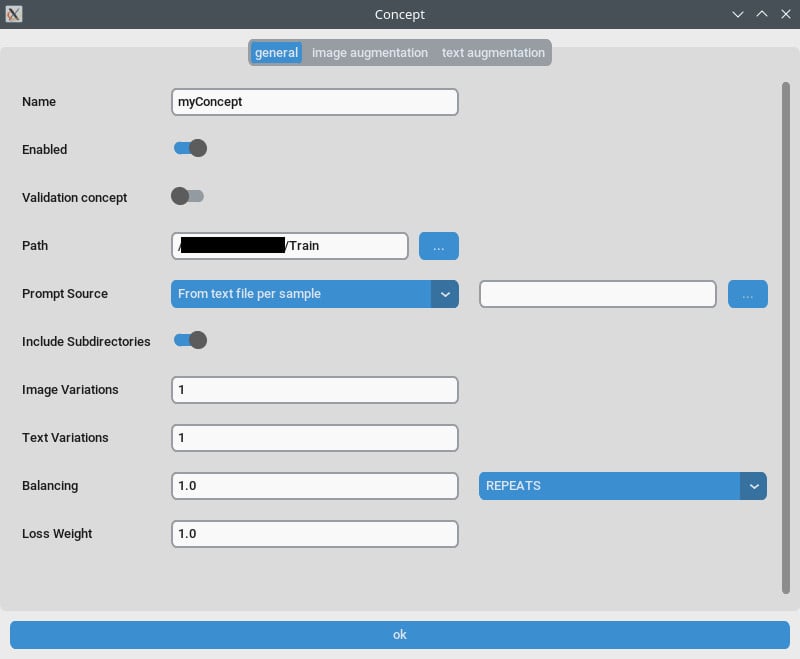

Concept Tab/General Settings

- Repeats: Set Repeats to 1. Manage how often the data is repeated via Number of Epochs on the training tab.

- Prompt Source: Use "from single text file" if you want a "trigger word" instead of individual captions per image. Point this setting to a text file containing your trigger word or phrase.
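To see how Repeats and Number of Epochs interact, here is a rough step count in Python. It is a sketch under simple assumptions. Each image is shown `repeats` times per epoch, and a final partial batch still counts as a step (the real trainer may handle it differently). The function name and dataset sizes are hypothetical.

```python
import math


def optimizer_steps(num_images: int, repeats: float, epochs: int, batch_size: int) -> int:
    """Rough number of optimizer steps for a single concept.

    Assumes every image is seen `repeats` times per epoch and that a final
    partial batch still produces one step.
    """
    samples_per_epoch = math.ceil(num_images * repeats)
    steps_per_epoch = math.ceil(samples_per_epoch / batch_size)
    return steps_per_epoch * epochs


# Hypothetical dataset: 20 images, Repeats = 1, 40 epochs, batch size 1.
print(optimizer_steps(num_images=20, repeats=1, epochs=40, batch_size=1))  # 800
```

Steps scale with repeats × epochs. So 20 images at Repeats 1 for 40 epochs gives the same 800 steps as Repeats 40 for 1 epoch. Keeping Repeats at 1 leaves Number of Epochs as the single setting to tune.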

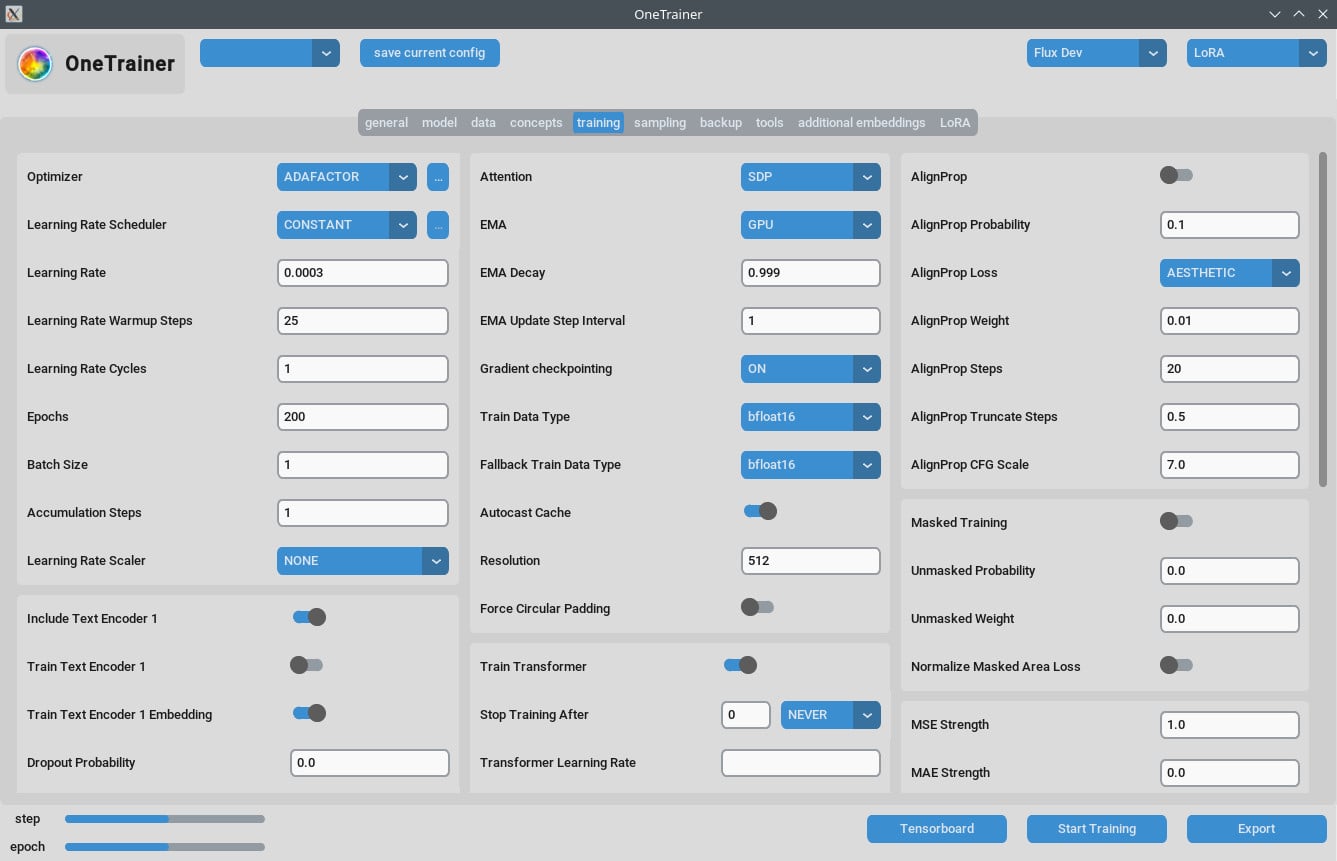

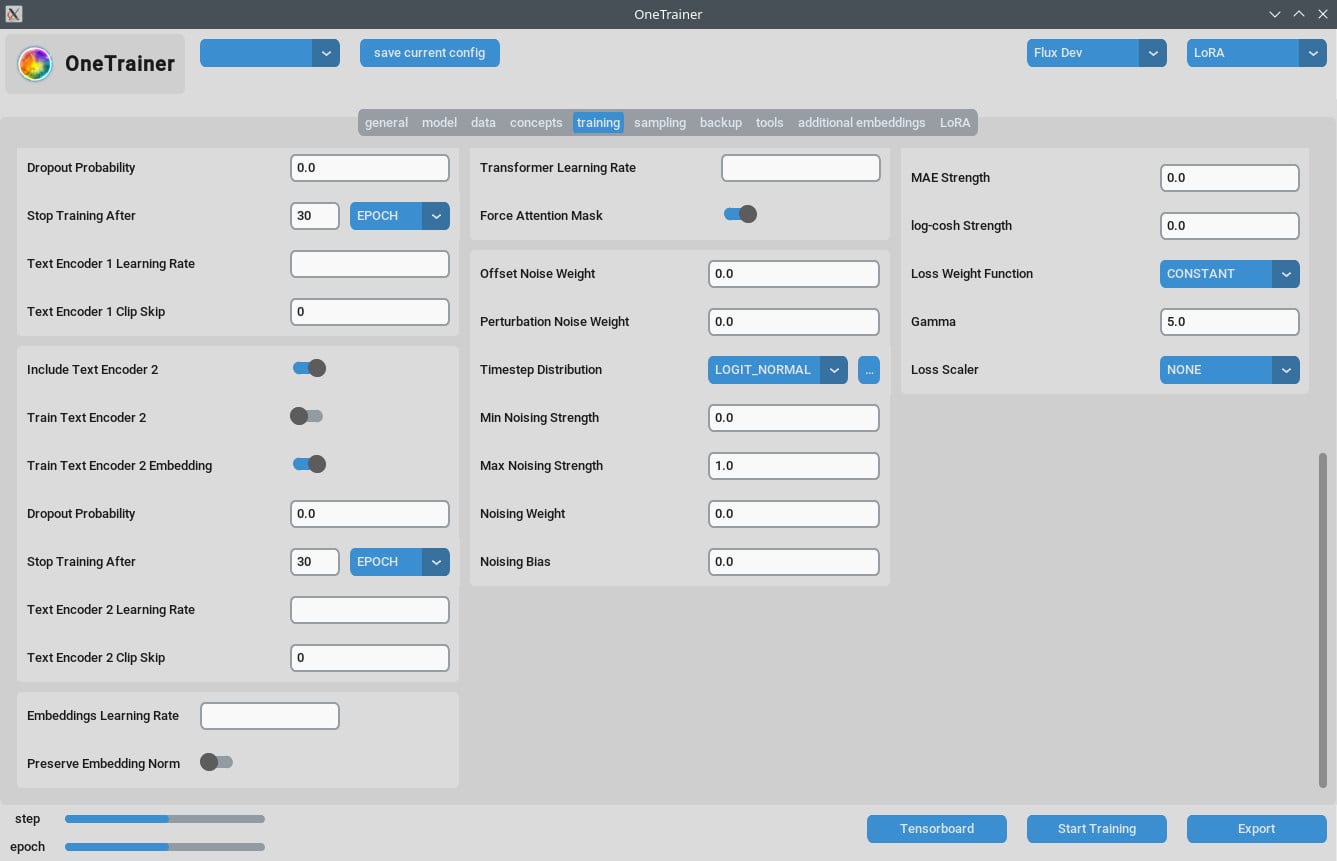

Training Tab

Resolution Settings

- For Better Quality:

- Set Resolution to 768 or 1024 for higher-quality outputs.


- EMA Settings:

- EMA: Use it for SDXL training.

- EMA GPU: To save VRAM, set EMA from "GPU" to "OFF."
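Higher resolution costs VRAM and time mainly because the transformer has to process more tokens. The sketch below assumes Flux's VAE downsamples by 8 and that the transformer packs each 2×2 block of latents into one token, which works out to one token per 16×16 pixel area. It ignores the text tokens that join the same attention sequence, and `flux_image_tokens` is a hypothetical helper.

```python
def flux_image_tokens(width: int, height: int, vae_factor: int = 8, patch: int = 2) -> int:
    """Approximate number of image tokens the Flux transformer processes.

    Assumes an 8x VAE downsampling and 2x2 latent patches per token, i.e. one
    token per 16x16 pixel area. Text tokens are not counted.
    """
    latent_w, latent_h = width // vae_factor, height // vae_factor
    return (latent_w // patch) * (latent_h // patch)


baseline = flux_image_tokens(512, 512)
for side in (512, 768, 1024):
    tokens = flux_image_tokens(side, side)
    # Self-attention work grows roughly with the square of the sequence length.
    attention_ratio = (tokens / baseline) ** 2
    print(f"{side}x{side}: {tokens} image tokens, ~{attention_ratio:.1f}x attention cost vs 512x512")
```

1024×1024 yields 4096 image tokens, four times as many as 512×512 and about 1.8 times as many as 768×768. The MLP layers scale roughly linearly with tokens, so the overall slowdown falls somewhere between linear and quadratic.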

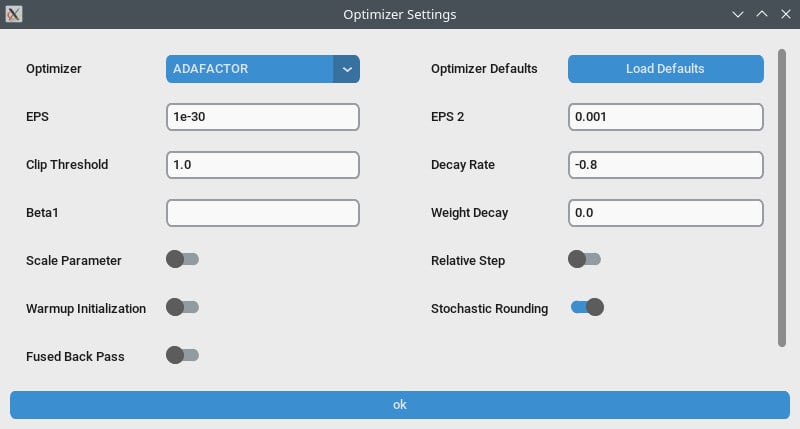

- Learning Rate:

- A starting point could be 0.0003 or 0.0004. Adjust according to your specific needs.

- Number of Epochs:

- Typically, 40 epochs show good results. Adjust based on your dataset's complexity.

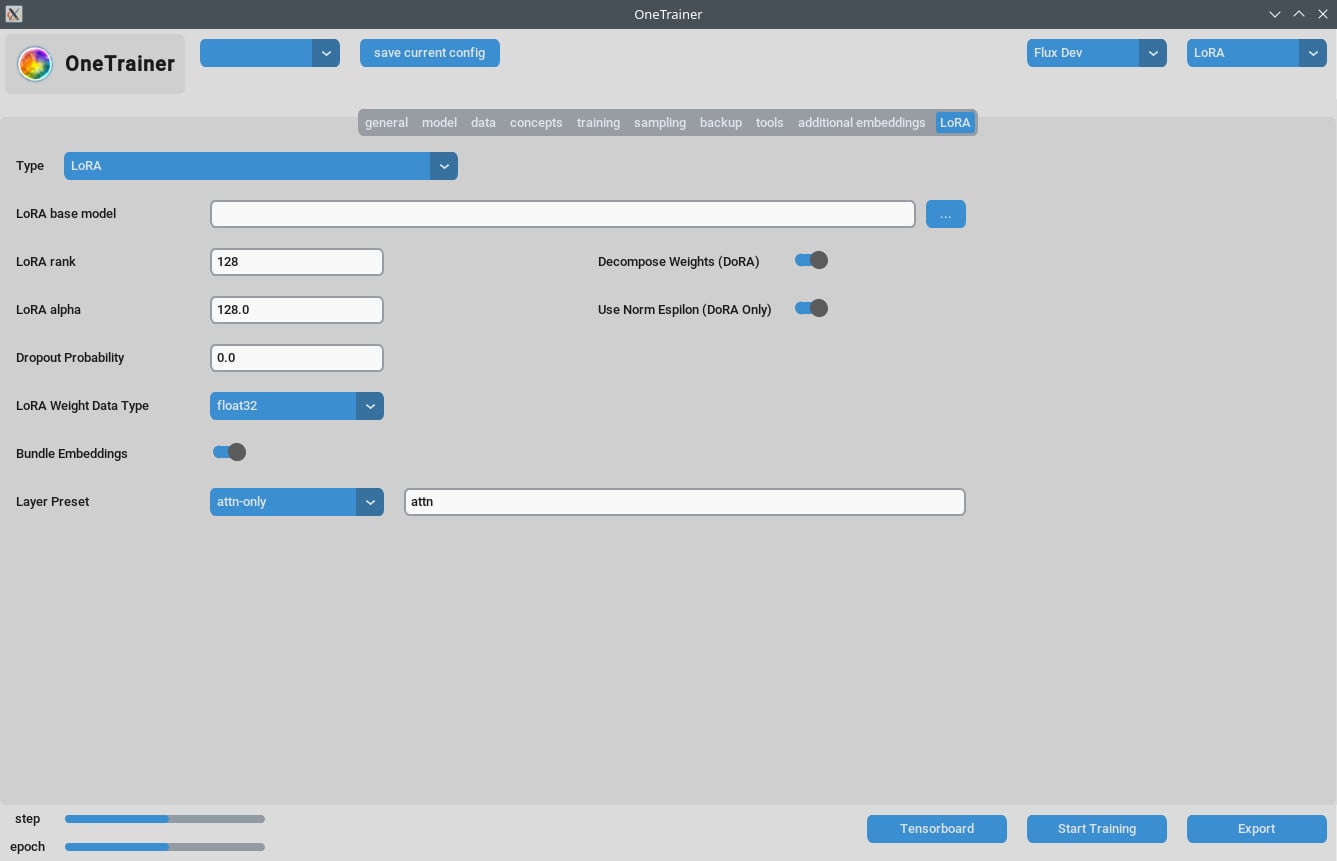

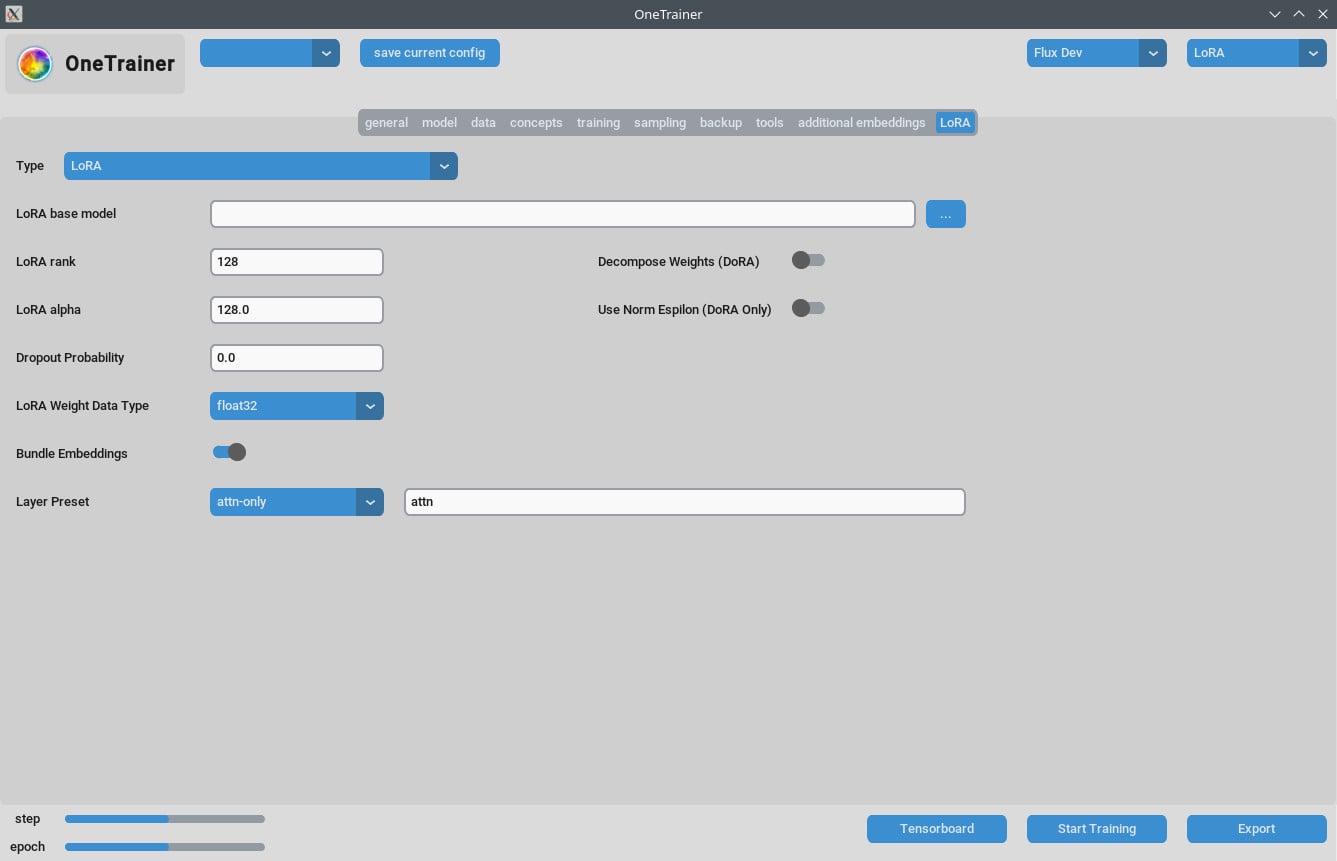

LoRA Tab

- Rank and Alpha:

- Keep these values the same (e.g., 64/64, 32/32) or adjust your learning rate accordingly.

- Resulting LoRA Models:

- If you load the resulting LoRA in ComfyUI, update ComfyUI to a recent version so that it can load the file correctly.
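The reason rank and alpha are tied to the learning rate is the standard LoRA scaling factor alpha / rank, which multiplies the learned update. The sketch below is a first-order heuristic, not a OneTrainer formula. It assumes an Adam-style optimizer, where the parameter step is roughly independent of gradient scale, so the change in the merged weight is proportional to lr × alpha / rank. Both function names are hypothetical.

```python
def lora_scale(rank: int, alpha: float) -> float:
    """Standard LoRA scaling: the low-rank update B @ A is multiplied by alpha / rank."""
    return alpha / rank


def compensated_lr(base_lr: float, rank: int, alpha: float) -> float:
    """Heuristic learning rate that keeps the effective update size close to
    what base_lr gives when alpha == rank (scale 1.0).

    Assumption: with Adam-like optimizers the weight change scales with
    lr * alpha / rank, so dividing by the scale compensates for it.
    """
    return base_lr / lora_scale(rank, alpha)


print(lora_scale(64, 64))              # 1.0
print(compensated_lr(3e-4, 64, 32))    # 0.0006
```

With rank 64 and alpha 32, the update is halved, so a learning rate of about 6e-4 roughly matches 3e-4 at 64/64. Treat the result as a starting point and verify it with samples.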

Performance Optimization

- Gradient Checkpointing:

- Try disabling it if training is slow and your GPU has VRAM to spare. Gradient checkpointing saves memory by recomputing activations during the backward pass, which costs speed.

- bf16 vs nfloat4:

- In the "model" tab, switch Override Prior Data Type to bf16 to potentially increase quality. This raises VRAM usage and also affects training speed.

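The VRAM gap between bf16 and nfloat4 follows from bits per parameter. This back-of-the-envelope sketch assumes a transformer of roughly 12 billion parameters, which is the published size of Flux.1. It counts the weights only: activations, optimizer state, text encoders, the VAE, and the small per-block scale factors stored with NF4 weights are all left out. The helper name is hypothetical.

```python
def weight_memory_gb(num_params: int, bits_per_param: float) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores activations, optimizer state, text encoders, the VAE and the
    quantization scale factors that NF4 stores alongside the weights.
    """
    return num_params * bits_per_param / 8 / 1e9


FLUX_TRANSFORMER_PARAMS = 12_000_000_000  # Flux.1 transformer: roughly 12B parameters

for name, bits in (("bf16", 16), ("nfloat4", 4)):
    print(f"{name}: ~{weight_memory_gb(FLUX_TRANSFORMER_PARAMS, bits):.0f} GB")
# bf16: ~24 GB
# nfloat4: ~6 GB
```

Before anything else is loaded, bf16 weights are about as large as the entire VRAM of a 24 GB card. Nfloat4 weights fit on a 12 GB card with room to spare.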

Handling Sampling Issues

- Some users have run into out-of-memory (OOM) errors during sampling. Make sure your GPU has sufficient VRAM.

- Regularly update OneTrainer to incorporate bug fixes and patches that address such issues.

Tips for Multi-Concept Training

Current Limitations

- Training multiple people on different trigger words in the same session often fails.

- Different objects or situations (e.g., training shoes and specific cars) work better.

Best Practices

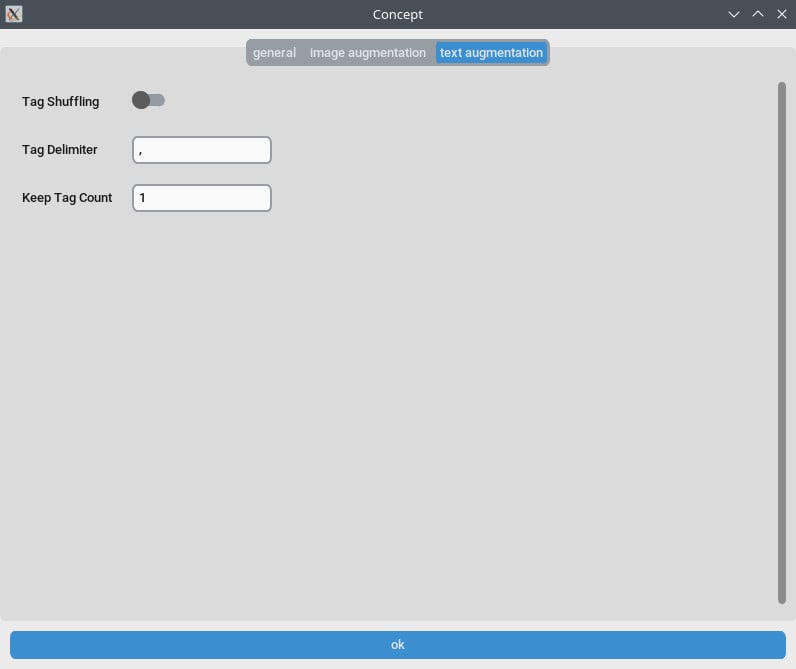

- Short Captions:

- Use short, natural language captions. These tend to work well in just a few hundred steps.

- Stacking LoRAs:

- Training a concept LoRA and a character LoRA separately and stacking them gives better results than training both in a single LoRA.
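Stacking works because each LoRA is just a scaled low-rank update added to the base weights. Two LoRAs therefore add two updates: W' = W + s1·(B1 @ A1) + s2·(B2 @ A2). The toy Python below shows this with 2×2 matrices and plain lists. It illustrates the idea rather than the code any particular loader uses. `apply_loras` is a hypothetical name, and each strength is assumed to already include that LoRA's alpha / rank factor.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (m x k) and a (k x n) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_loras(weight: Matrix, loras: list[tuple[Matrix, Matrix, float]]) -> Matrix:
    """Return W + sum(strength * (B @ A)) over every (B, A, strength) in `loras`."""
    result = [row[:] for row in weight]  # copy so the base weight stays untouched
    for b, a, strength in loras:
        delta = matmul(b, a)  # low-rank update for this LoRA
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                result[i][j] += strength * value
    return result


base = [[1.0, 0.0], [0.0, 1.0]]
concept = ([[1.0], [0.0]], [[0.0, 1.0]], 1.0)    # rank-1 update at strength 1.0
character = ([[0.0], [1.0]], [[1.0, 0.0]], 0.5)  # rank-1 update at strength 0.5
print(apply_loras(base, [concept, character]))  # [[1.0, 1.0], [0.5, 1.0]]
```

Each LoRA contributes its own additive term, and each term's strength can be tuned independently at inference time.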

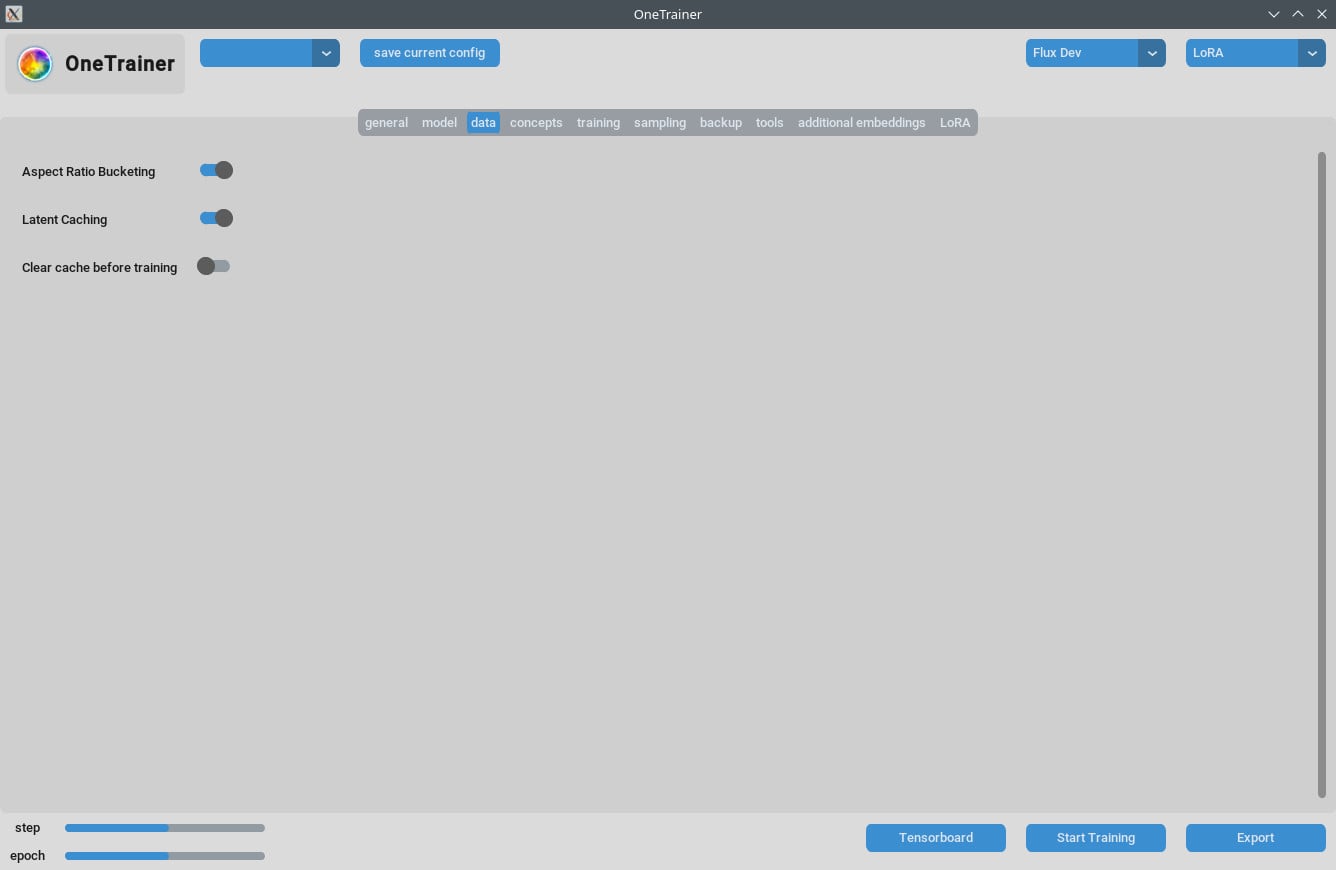

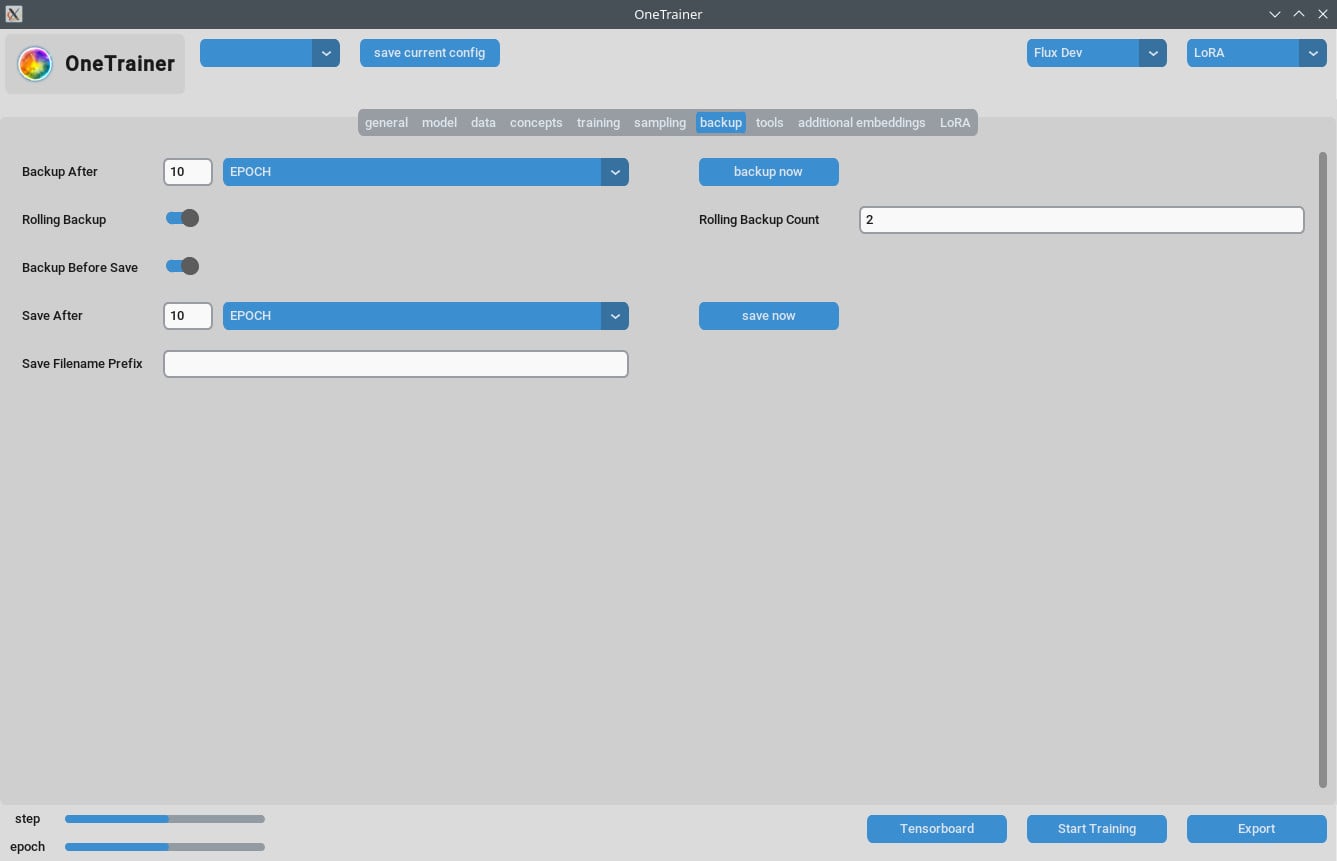

Captions and Data Management

- Organize your training data carefully. The number of epochs depends on the complexity of your data.

- Short, precise captions can significantly improve training efficiency.
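A quick dataset check helps catch missing or overly long captions before training. This sketch assumes per-image captions are stored as `.txt` files sharing the image's base name (for example `photo01.png` and `photo01.txt`), which is a common convention. Check that it matches your concept's prompt source setting. The 30-word limit is an arbitrary threshold chosen for illustration, not a OneTrainer setting, and `check_captions` is a hypothetical helper.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def check_captions(folder: str, max_words: int = 30) -> tuple[list[str], list[str]]:
    """Return (images without a caption file, images whose caption exceeds max_words).

    Assumes each caption is a .txt file next to its image with the same base name.
    """
    missing, too_long = [], []
    for image in sorted(Path(folder).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip caption files and anything that is not an image
        caption_file = image.with_suffix(".txt")
        if not caption_file.exists():
            missing.append(image.name)
        elif len(caption_file.read_text(encoding="utf-8").split()) > max_words:
            too_long.append(image.name)
    return missing, too_long
```

Call `check_captions("path/to/concept_folder")` and fix anything it lists before starting a run.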

FAQs

Q1: Can I use OneTrainer for models other than Flux.1?

Yes, OneTrainer supports SD 1.5, SDXL, and more. Settings will vary based on the model.

Q2: Does OneTrainer automatically use the concept name as a trigger word?

Yes, the concept name can act as a trigger word. Ensure it’s meaningful to your project.

Q3: How can I manage VRAM effectively during training?

Set Gradient Checkpointing to CPU_OFFLOAD. This setting helps reduce VRAM usage without significantly impacting speed.

Q4: What’s the impact of using NF4 vs. full precision layers?

NF4 reduces VRAM usage but may slightly decrease quality. Full precision layers maintain quality but require more VRAM.

Q5: How do I decrease the size of my LoRA model?

You can lower the Rank (and Alpha with it, to keep their ratio unchanged) or set the LoRA weight data type to bfloat16. Both shrink the file but may affect quality.
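Only the rank changes how many numbers a LoRA stores. Alpha is a single scalar per layer, so it barely affects file size. For one linear layer, LoRA keeps A (rank × in) and B (out × rank), and the data type sets the bytes per number. The 3072 × 3072 layer below is an illustrative choice based on Flux's hidden size of 3072. The helper names are hypothetical, and a real file repeats this for every targeted layer.

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}


def lora_layer_params(in_features: int, out_features: int, rank: int) -> int:
    """Parameters for one LoRA-adapted linear layer: A is rank x in, B is out x rank."""
    return rank * in_features + out_features * rank


def lora_layer_bytes(in_features: int, out_features: int, rank: int, dtype: str = "float32") -> int:
    """Storage for one layer's A and B matrices in the given data type."""
    return lora_layer_params(in_features, out_features, rank) * BYTES_PER_PARAM[dtype]


# Illustrative 3072 x 3072 projection (3072 is Flux's hidden size).
for rank in (64, 32):
    for dtype in ("float32", "bfloat16"):
        kib = lora_layer_bytes(3072, 3072, rank, dtype) / 1024
        print(f"rank {rank}, {dtype}: {kib:.0f} KiB per layer")
```

Halving the rank or switching from float32 to bfloat16 each halves the size. Doing both cuts it to a quarter: 1536 KiB becomes 384 KiB for this layer.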

Q6: Can OneTrainer handle multi-resolution training?

Yes, OneTrainer supports multi-resolution training. Follow the guidelines in the OneTrainer wiki for setting it up.

Q7: My images appear as pink static when using DoRA. What should I do?

Check your attention layer settings. Avoid using "full" for the attention layers as this might be causing the issue.

Q8: How do I handle multiple subjects in OneTrainer?

Balance the subjects by giving each concept its own Repeats value, so that subjects with fewer images are repeated more often. Organize the data carefully so that every subject gets roughly equal training.
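One way to choose those Repeats values is to make every concept contribute as many samples per epoch as the largest one. This is a minimal sketch of that idea. The helper name and concept names are hypothetical, and you may need to round the values if your setup only accepts whole numbers.

```python
def balanced_repeats(image_counts: dict[str, int]) -> dict[str, float]:
    """Repeats per concept so each concept yields as many samples per epoch
    as the concept with the most images."""
    largest = max(image_counts.values())
    return {name: largest / count for name, count in image_counts.items()}


print(balanced_repeats({"person_a": 30, "person_b": 15}))
# {'person_a': 1.0, 'person_b': 2.0}
```

Here both subjects end up with 30 samples per epoch: person_a shows 30 images once, and person_b shows 15 images twice.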

Q9: Is there an equivalent to 'split mode' in OneTrainer?

OneTrainer does not have a 'split mode'. Instead, use settings like CPU_OFFLOAD for Gradient Checkpointing to manage VRAM more effectively.

Q10: Can OneTrainer settings be adjusted for better quality at higher VRAM usage?

Yes, increase the resolution and adjust data types and gradient checkpointing settings to enhance quality.

This guide should provide all necessary steps, settings, and troubleshooting tips for effectively using OneTrainer with Flux AI models. Happy training!